This is the second post in our series on scalable creator marketing strategies. Earlier we talked about the benefits of working with mid-sized content creators, and now we dive deeper into how maximizing the number of content creators in a campaign helps improve performance and predictability.

Performance and predictability are the cornerstones of a modern creator marketing campaign and the building blocks for scale. Performance is obvious: marketing must deliver results. Whether that means app installs, new customers, or even just eyeballs for a brand, it’s what turns the campaign into an ROI-positive investment. But performance alone isn’t enough.

Marketers are also expected to deliver results consistently and predictably, month after month.

Unfortunately, predicting the performance of a content creator campaign is notoriously tricky. How an individual channel performs is affected by so many random variables that even careful planning and research don’t guarantee results. Sometimes you hit the jackpot with a viral hit, but often the channel misses the mark. It’s frustrating, especially because in many cases there’s nothing we can do about it.

Or is there?

Using data to simulate and test out different strategies

While we can’t know the future, we can tap into historical data [0] and run simulations with it:

- Select channels for a hypothetical campaign based on what we would have known at the time, e.g. the average views of their past few videos

- Check how the next video from each of those channels actually performed

- Add up those videos’ views to evaluate the hypothetical performance of our campaign
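
The three steps above can be sketched as a small backtest in Python. This is a minimal illustration, not Matchmade’s pipeline: it assumes each channel’s history is a list of 7-day view counts ordered oldest to newest, uses the mean of all but the latest video as the selection-time estimate (the simple averaging described in footnote [0]), and treats the latest video as the outcome. The function names, the filter band, and every channel except the footnote example are hypothetical.

```python
import random
from statistics import mean

# Hypothetical toy data: each inner list is one channel's 7-day views,
# oldest video first. The first channel is the example from footnote [0].
HISTORIES = [
    [876, 457, 7659, 765],
    [2500, 3100, 2800, 4200],
    [3300, 2900, 3600, 1900],
    [500, 600, 400, 450],
]


def split_history(views):
    """Return (baseline, outcome): the mean of the earlier videos, which is
    what we would have known at selection time, and the latest video's views."""
    if len(views) < 2:
        raise ValueError("need at least one earlier video and one outcome")
    return mean(views[:-1]), views[-1]


def backtest_campaign(histories, low, high, n_channels, rng):
    """Simulate one hypothetical campaign and return its total views."""
    # Select: keep channels whose baseline falls inside the [low, high] band.
    candidates = []
    for views in histories:
        baseline, outcome = split_history(views)
        if low <= baseline <= high:
            candidates.append(outcome)
    if len(candidates) < n_channels:
        raise ValueError("not enough channels in the baseline band")
    # Sample channels at random; each candidate already carries its next
    # video's views (the "check" step), so summing them evaluates the campaign.
    return sum(rng.sample(candidates, n_channels))


if __name__ == "__main__":
    print(split_history(HISTORIES[0]))  # baseline ~2997.3, outcome 765
    print(backtest_campaign(HISTORIES, 2000, 4000, 3, random.Random(42)))
```

With the band set to 2 000–4 000 average views, the fourth channel is filtered out and the campaign result is the sum of the other three channels’ latest videos.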

With these simulations, a consistently winning strategy emerges: run the campaign with as many content creators as you can. While each individual creator’s performance is still subject to random fluctuation, the more bets there are, the less the campaign as a whole fluctuates.

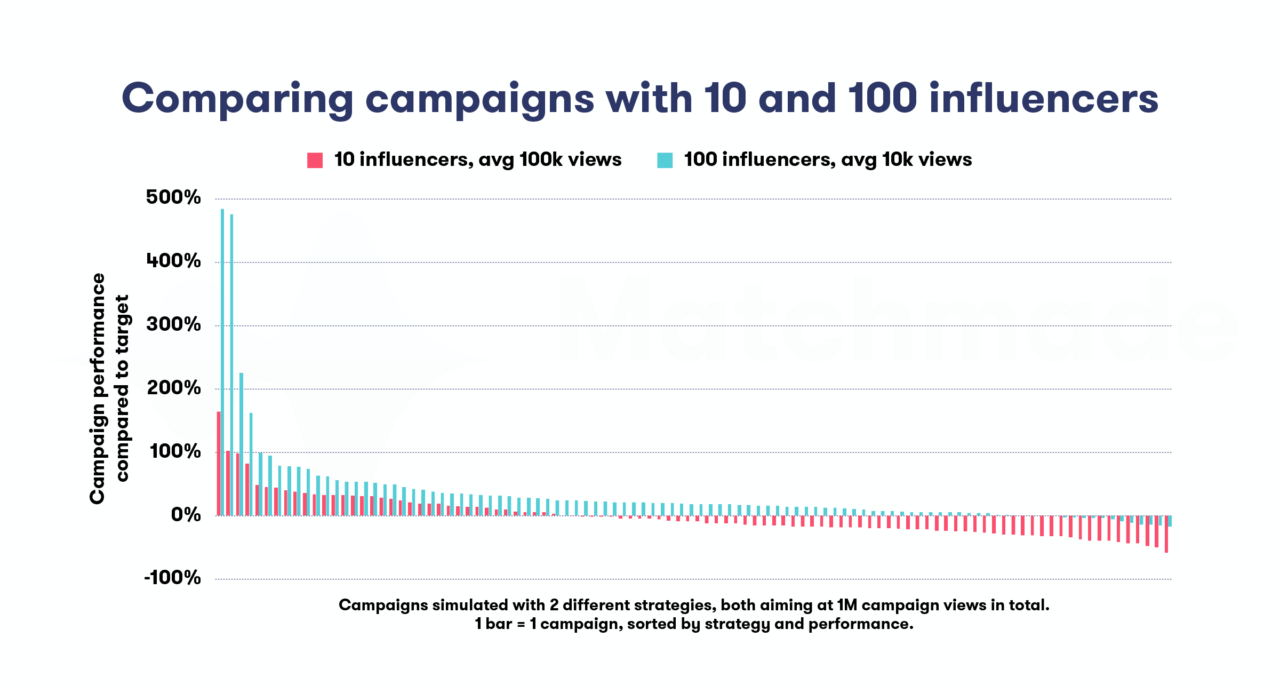

To illustrate this, let’s look at a campaign that aims to get 1 million views [1]. We’ll run the campaign 100 times, trying to fill the views goal with different content creator selection strategies: either fewer, larger channels or more, smaller ones.

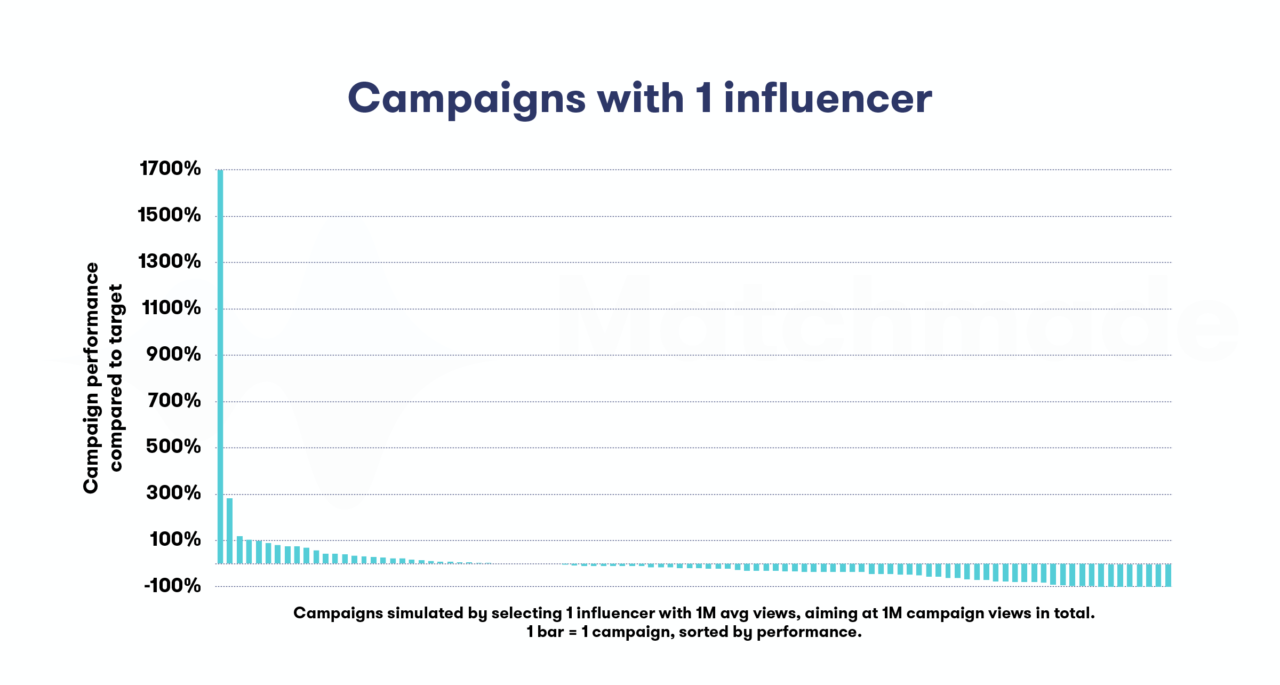

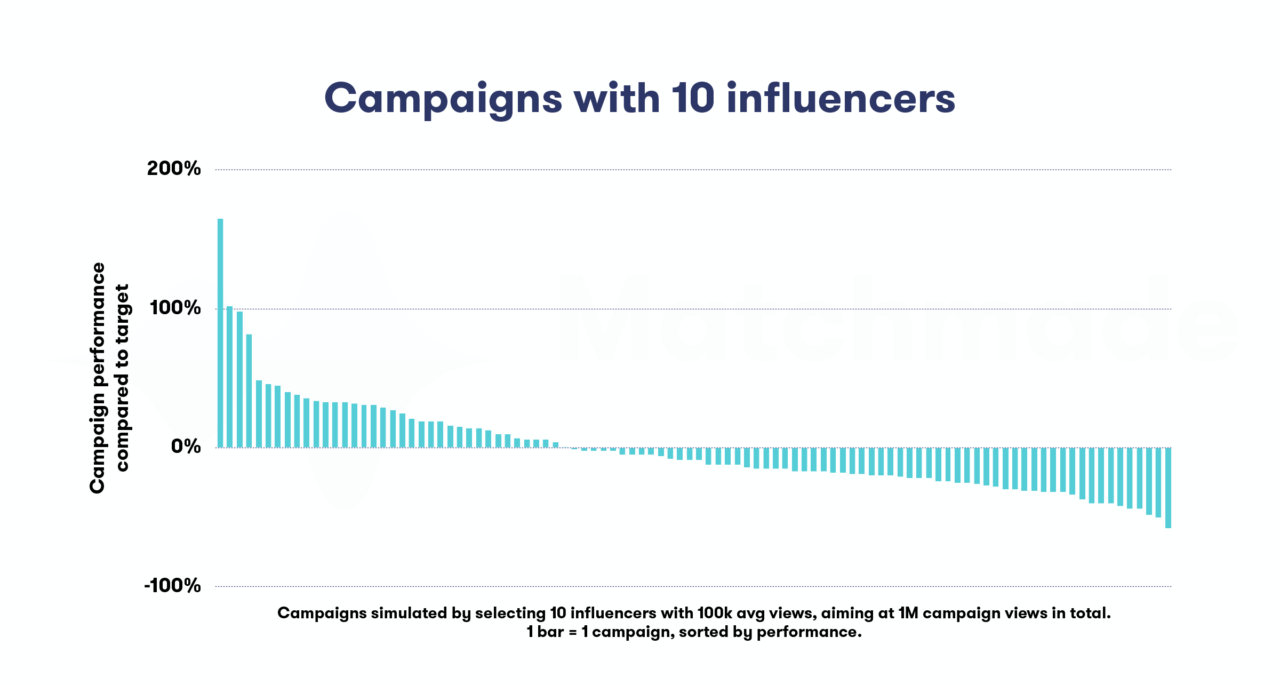

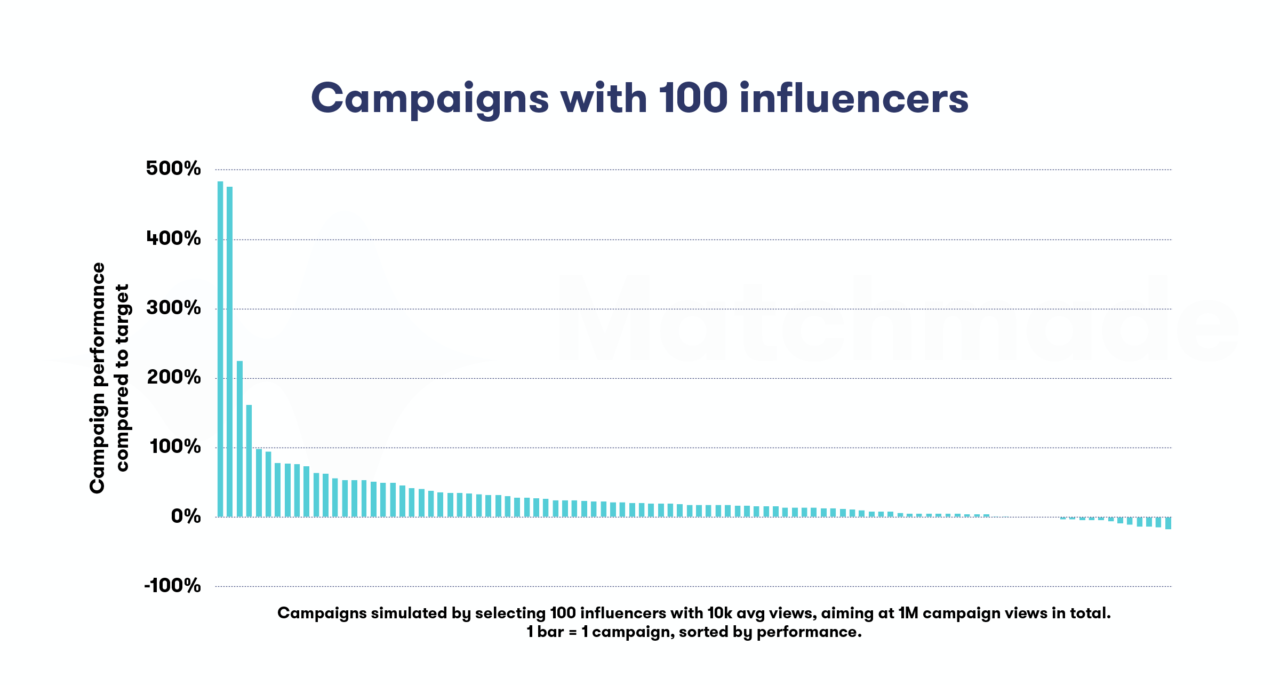

In these charts, each bar is one simulated campaign, and the Y-axis shows how much the campaign exceeded or missed the goal of one million views. You can also read each chart as the probability of a campaign succeeding with that strategy.
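
To get a feel for why the charts differ, here is a toy Monte Carlo version in Python. It does not use Matchmade’s data: it assumes each creator’s next-video views follow a log-normal distribution whose mean equals that creator’s share of the goal, with a hypothetical spread `SIGMA = 1.2`. Because the mean is pinned exactly to the goal, the success rates it prints won’t match the charts; what it does show is the spread of outcomes narrowing as the same goal is split across more creators.

```python
import math
import random
from statistics import pstdev

GOAL = 1_000_000      # campaign goal from the example, in views
SIGMA = 1.2           # hypothetical spread of log-views per video
N_CAMPAIGNS = 100     # simulated campaigns per strategy


def creator_views(expected, rng):
    """Draw one creator's next-video views from a log-normal distribution
    whose mean equals `expected` (an assumption, not fitted to real data)."""
    mu = math.log(expected) - SIGMA ** 2 / 2
    return rng.lognormvariate(mu, SIGMA)


def simulate_strategy(n_creators, rng):
    """Return each simulated campaign's result as a fraction of GOAL,
    with the goal split evenly across n_creators."""
    expected = GOAL / n_creators
    return [
        sum(creator_views(expected, rng) for _ in range(n_creators)) / GOAL
        for _ in range(N_CAMPAIGNS)
    ]


if __name__ == "__main__":
    rng = random.Random(7)
    for n in (1, 10, 100):
        results = simulate_strategy(n, rng)
        hit_rate = sum(r >= 1 for r in results) / len(results)
        print(f"{n:>3} creators: reached goal {hit_rate:.0%}, "
              f"worst {min(results):.0%}, best {max(results):.0%}, "
              f"spread {pstdev(results):.2f}")
```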

Strategy 1: Select just one content creator that on average delivers around a million views

Here’s what happened when we ran the campaign with just one content creator who averages around 1 million views, expecting them to deliver all the needed results by themselves.

One out of 100 campaigns was a major hit, but there was also a high likelihood that the campaign would completely tank, depending on how this one content creator’s next video performed. 66% of the campaigns working with just one massive content creator performed below expectations.

Strategy 2: Select 10 content creators that average around 100 000 views each

What if we split the campaign over 10 different content creators, expecting them to total 1 million views? This strategy yields more balanced results: no crazy hits, but the worst-case scenario was also much better than when we ran the campaign with just one channel.

Overall, splitting the campaign risk over 10 different content creators seems much safer than relying on a single large one.

Strategy 3: Select 100 content creators that average only around 10 000 views each

This is where the data gets really promising. When we split the campaign over 100 different content creators, 85 out of 100 simulations reached or exceeded the campaign goal. Even the ones that missed the mark came close, the worst ending at 83% of the goal. The more content creators we add to the campaign, the more likely the campaign is to succeed.

Running a campaign with more channels reduces risk and improves predictability

This is the law of large numbers at work. In general terms, it means that when we roll a six-sided die, we can’t know the result of any individual roll. But if we roll a large number of dice, or one die many times, we can be fairly sure that the average of the values ends up close to 3.5. The more dice we roll, the more likely this becomes. If you’re interested in diving deeper, Wikipedia’s article on the law of large numbers is a great starting point.
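
The dice version of the law of large numbers is easy to check with a few lines of Python (the function name is just for illustration): as the number of rolls grows, the average drifts toward 3.5.

```python
import random


def average_roll(n_rolls, rng):
    """Average of n_rolls fair six-sided die rolls."""
    return sum(rng.randint(1, 6) for _ in range(n_rolls)) / n_rolls


if __name__ == "__main__":
    rng = random.Random(1)
    for n in (1, 10, 100, 10_000):
        # Individual runs vary, but larger n typically lands closer to 3.5.
        print(f"{n:>6} rolls: average {average_roll(n, rng):.3f}")
```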

It’s hard to predict how an individual content creator, or a roll of a die, will perform. But the more content creators we add to the campaign, the more likely it is that random fluctuations cancel each other out. With more channels, the results are more likely to be close to what we’d expect. The fewer channels there are, the more room there is for random variance that we have no control over.
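
The same idea can be written down for a campaign under two simplifying assumptions (not something the simulations above rely on): creators perform independently of each other, and every creator’s views have the same relative spread, a coefficient of variation $c$. Split a goal $G$ evenly over $n$ creators, so creator $i$’s views $X_i$ have mean $G/n$ and standard deviation $cG/n$:

```latex
\begin{aligned}
S &= \sum_{i=1}^{n} X_i, \qquad \mathbb{E}[S] = n \cdot \frac{G}{n} = G,\\
\operatorname{Var}(S) &= \sum_{i=1}^{n} \operatorname{Var}(X_i) = n\left(\frac{cG}{n}\right)^{2} = \frac{c^{2}G^{2}}{n},\\
\frac{\sqrt{\operatorname{Var}(S)}}{\mathbb{E}[S]} &= \frac{c}{\sqrt{n}}.
\end{aligned}
```

The expected total stays at $G$ however the goal is split, but the relative spread falls with the square root of $n$: going from 1 to 100 creators cuts it by a factor of 10.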

The simulations above illustrate this. When the campaign depends on just one content creator delivering all of the results, the risk is high. The campaign might go unbelievably well, but 66% of those campaigns didn’t reach the goal, and 13 out of 100 got less than 10% of the intended results. Contrast this with the campaigns using 100 different content creators: 85 out of 100 reached the goal, and even the worst one ended up at 83% of it. That’s a huge difference!

In addition to predictability, we can also boost performance

In addition to more predictable results and a lower probability of complete duds, campaigns with more channels are also likely to perform better.

This comes down to two reasons. First, the more channels and pieces of content there are, the higher the chance that one of them gets lucky and becomes a viral hit. The highest-performing campaigns all had a viral hit or two among them.
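
The first effect is simple probability. If each video independently has some small chance p of going viral, the chance that at least one of n videos does is 1 - (1 - p)^n. A quick Python check, where the 1% figure is purely hypothetical:

```python
def prob_at_least_one_viral(p_viral, n_videos):
    """Chance that at least one of n independent videos goes viral,
    if each does so with probability p_viral (a hypothetical input)."""
    if not 0 <= p_viral <= 1:
        raise ValueError("p_viral must be a probability")
    return 1 - (1 - p_viral) ** n_videos


if __name__ == "__main__":
    for n in (1, 10, 100):
        print(n, round(prob_at_least_one_viral(0.01, n), 3))
```

With p = 1%, that is about 1% for one creator, roughly 10% for ten, and about 63% for a hundred.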

The second reason is asymmetric risk. If a channel performs poorly, the worst that can happen is that it gets no viewers at all, and the larger the channel, the bigger that worst-case loss. The upside, on the other hand, is almost unlimited: if a channel goes viral or is picked up by a recommendation algorithm, viewership can skyrocket.
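
A rough way to see the asymmetry in Python. Views can’t go below zero, so a creator expected to deliver a share of the goal can miss by at most that share, which is 1/n of the goal when it’s split evenly across n creators. The upside has no such cap. The second function samples hypothetical log-normal outcomes (an illustrative assumption, not fitted to real data) to show the largest shortfall staying under 100% of expectation while the largest excess runs far past it. Both function names are made up for this sketch.

```python
import math
import random


def worst_case_share_from_one_dud(n_creators):
    """Largest fraction of an evenly split goal that one creator can miss by:
    with zero views, they fall short by exactly their share, 1 / n_creators."""
    return 1 / n_creators


def upside_vs_downside(expected, sigma, draws, rng):
    """Sample hypothetical log-normal outcomes with mean `expected` and return
    (largest shortfall, largest excess), both relative to `expected`."""
    mu = math.log(expected) - sigma ** 2 / 2
    ratios = [rng.lognormvariate(mu, sigma) / expected for _ in range(draws)]
    return 1 - min(ratios), max(ratios) - 1


if __name__ == "__main__":
    for n in (1, 10, 100):
        print(f"{n:>3} creators: one dud costs at most "
              f"{worst_case_share_from_one_dud(n):.0%} of the goal")
    down, up = upside_vs_downside(10_000, 1.2, 10_000, random.Random(3))
    print(f"largest shortfall {down:.0%}, largest excess {up:.0%}")
```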

The other side of the equation: transaction costs

These simulations conveniently omit a significant aspect of a creator marketing campaign: each content creator and deal comes with overhead. It costs time and money to handle the deal with a content creator, and the more content creators we include in the campaign, the more it’s going to cost.

It’s a balance between minimizing the costs of negotiations and maximizing predictability through the law of large numbers.

However, technology helps us tip that balance. With better tools and automation, we can lower transaction costs. Low overhead makes it economically feasible to run campaigns with more and more content creators, ultimately resulting in better and more predictable performance.
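
The balance described in the two paragraphs above can be sketched as a toy optimization in Python. Everything here is a hypothetical model for illustration: deal overhead grows linearly with the number of creators, the campaign’s spread shrinks as `cv * goal / sqrt(n)` (which holds for independent creators with the same relative spread `cv`), and `risk_weight` is a made-up price on each view of spread. Brute force finds the creator count with the lowest combined cost.

```python
import math


def total_cost(n, cost_per_deal, risk_weight, goal, cv):
    """Deal overhead for n creators plus a penalty on the campaign's
    standard deviation in views, cv * goal / sqrt(n)."""
    return n * cost_per_deal + risk_weight * cv * goal / math.sqrt(n)


def best_creator_count(cost_per_deal, risk_weight=0.01, goal=1_000_000,
                       cv=1.5, max_n=1_000):
    """Creator count in 1..max_n with the lowest total_cost (brute force)."""
    return min(
        range(1, max_n + 1),
        key=lambda n: total_cost(n, cost_per_deal, risk_weight, goal, cv),
    )


if __name__ == "__main__":
    for cost in (500, 50):
        print(f"cost per deal {cost}: best with {best_creator_count(cost)} creators")
```

With these made-up numbers, cutting the per-deal cost from 500 to 50 moves the optimum from 6 to 28 creators: cheaper deals make it worth adding more of them.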

The more content creators you work with, the better your odds of success are

Of course, none of this means that we should forgo human judgment entirely and blindly choose channels at random, as we did in our simulations. We should still do preliminary filtering by identifying groups or categories of content creators that are likely to reach our target audience. However, once you’ve identified all the potential content creators, it’s a good idea to work with as many of them as possible instead of trying to pick the single best one.

The lesson here is that the more content creators you work with, the better your odds of success. This is backed by both theory and real-world data. Putting this into practice doesn’t need to be expensive or time-consuming either: instead of working only with celebrities, working with many mid-tier content creators is a viable option, especially with tooling and automation.

This also means that when reporting on and evaluating campaigns, it makes sense to focus on the bottom line rather than on individual posts or videos. Among individual content creators, there are bound to be successes and failures that we have no control over. If we obsess over individual videos, we might lose out on many opportunities and miss the forest for the trees. What ultimately matters is whether our campaigns delivered results, so let’s focus on that!
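
A minimal Python sketch of campaign-level reporting, using made-up numbers: ten videos, each estimated at 100 000 views. Most of them miss their individual estimate, yet the campaign as a whole beats its goal. The function name and data are hypothetical.

```python
# Hypothetical per-video estimates and results, in views.
EXPECTED = [100_000] * 10
RESULTS = [20_000, 40_000, 60_000, 80_000, 90_000,
           95_000, 110_000, 150_000, 200_000, 400_000]


def campaign_report(results, expected):
    """Return (campaign attainment, number of videos below their estimate).
    Attainment is total views divided by total expected views."""
    if len(results) != len(expected) or not results:
        raise ValueError("need matching, non-empty lists")
    attainment = sum(results) / sum(expected)
    videos_below = sum(r < e for r, e in zip(results, expected))
    return attainment, videos_below


if __name__ == "__main__":
    attainment, below = campaign_report(RESULTS, EXPECTED)
    print(f"campaign attainment {attainment:.3f}; "
          f"{below} of {len(RESULTS)} videos missed their estimate")
```

Here six of the ten videos come in under their estimate, but the campaign still reaches 124.5% of its goal.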

Learn more about how Matchmade can help you by booking a demo call with one of our experts.

Footnotes:

[0] The data is based on the 7-day views of the 950M YouTube videos (from 8M channels) we track at Matchmade. The campaign simulations were run by first filtering channels based on the average 7-day views of their previous videos, and then randomly sampling the required number of channels for the campaign from those. For example, if the 7-day views of a channel’s previous videos were, starting from the oldest, 876, 457, 7659, and 765, the average used for filtering would be based on the first three videos (≈ 2997), and the campaign “result” would be the latest video with 765 views.

The view prediction algorithm we use at Matchmade is more sophisticated than simply taking an average. But while it’s more resistant to random fluctuation, it’s not immune to it. The strategies discussed here apply even with more sophisticated methods.

[1] Aiming only for views and selecting channels based solely on average views isn’t the most realistic example, but it still gives us insight. In the real world, we would first narrow down the channels based on the style, audience, and content that we believe will convert views into results such as app installs. Even then, we still face the same choice: out of all the potential channels, we can either choose the one we believe to be the best or split the risk over multiple channels. The same strategies apply, but omitting the initial filtering lets us focus on the essence here.