Running different kinds of tests has been the bread and butter of performance marketing for a long time. Methods such as split tests (aka A/B tests) or brand and conversion lift studies can be used to learn what actually performs, in a statistically rigorous way. This allows you to scale your advertising efforts further while improving installs, sales, brand recognition or other critical metrics.

Ideally, you would also want to do A/B testing in influencer marketing to systematically improve the channel's performance, rather than relying on one-off "viral hits", but advertisers typically find this hard to do. Successful A/B testing with creators is possible, but you need to take the unique aspects of the medium into consideration and leverage the right technology.

In this blog post I will answer the following questions:

- Why should you be doing A/B testing in influencer marketing?

- What are the typical challenges?

- What should you test, and how does testing differ from paid advertising?

- How do you run tests the right way?

1. Why you should be A/B testing in creator marketing

Systematic testing has traditionally been hard to do in creator marketing. The main reason is that you are typically only running a few sponsorships at a time. The fact that each creator has a very different style and approach to promotions doesn’t help either.

The lack of testing makes it very hard to improve performance in the long run. This is especially true if you want to grow your efforts to a bigger scale, which simply won't work if you depend on very directional results and gut feeling. Consistent testing helps you transform influencer marketing from one-off campaigns into a scalable and reliable channel in your marketing toolkit.

While testing can be challenging to get going, it is possible if you use the right automation. This allows you to hire a significant number of influencers at a time, making the results more reliable. But before delving deeper into the mechanics, what can you test?

2. What to test?

When thinking of potential tests, an excellent reference point is paid social (and by extension YouTube ads). Typical test components include bidding, audience and creative, plus other elements such as incentive levels and in-app rewards in game and app promotion.

The table below shows some example tests in paid ads, and what their equivalents could be in creator marketing. This list is by no means exhaustive, but should serve as a starting point for testing. Our team is also more than happy to guide you in your testing efforts. Get in touch so we can discuss the best options for you.

An easy test to start with is testing different kinds of integration lengths. The optimal length of a sponsorship might depend on aspects such as how complex your app, game or product is, what kind of creative assets you can provide to creators (in-game footage, brand film, etc.) or what kind of ads perform best for you in other channels. For this, you would first split the creators into two pools. You then provide each with a creative brief that is otherwise fairly uniform, but the length of the integration would be set differently.

As you can see from the table, in most cases the tests are not identical between mediums. They usually can't be carried over directly from one medium to the other, due to two big differences: creative formats and targeting capabilities.

Creative formats in paid ads vs. creator marketing

First, the creative formats and audience expectations are very different in paid social and creator content. In paid social, your ad is a separate content piece. It interrupts what the user is currently doing. Examples of this include Instagram story ads or in-stream video ads on YouTube. The ad experience is also far more uniform, because the ad content is set by you.

In creator marketing, the promotional material is an organic part of the content itself, such as a segment in a YouTube video. While you usually provide some assets and talking points to the creators, each of them will create a different kind of content piece, using the unique voice their audience expects from them.

You can see two different integration examples for FarmVille 3 from our case study with Zynga below. Even when provided with the same assets and specifications, the creative outcomes can be very different and still perform well.

This variety of creatives does not mean there aren’t any common elements for testing! We recommend testing parameters such as integration length, brand assets or talking points provided to creators – keeping in mind that you should only test one variable at a time.

Audience targeting in paid ads vs. creator marketing

Audience targeting capabilities are very extensive on paid social. Nowadays the best results are typically achieved by targeting big audiences and letting the algorithms find the most valuable users. In influencer marketing, you are restricted to the audiences of the creators you hire, but you may not be as restricted by this as you might think. Especially when working with a large number of creators, you can reach a broad range of demographics with very different interests.

Second, in creator marketing you can't create clean test cells with no audience overlap. This means you can't be 100% sure that a person sees, e.g., YouTube creator content from only one test cell. In Facebook split testing, by contrast, you can split the entire universe of Facebook users into two or more segments to ensure a person only sees content from one test cell.

In both mediums, the main rule to remember is to avoid excessively splitting up the audience or making the targeting too granular. This ensures that you reach enough impressions and conversions to make the findings reliable. You can use different kinds of A/B testing calculators (available online for free) to estimate the scale required.

Example: how many views would you need, based on your average conversion rate?

Your base view-through conversion rate (CVR) for app installs is 0.2%. You want to detect a relative difference in conversion rate of 10% or more (a view-through CVR below 0.18% or above 0.22%). This means you'd need about 800,000 views per test cell (depending on the level of certainty you want). As this example shows, splitting the test cells unnecessarily or limiting the targeting can make reaching meaningful results difficult and/or expensive.
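The article does not say which calculator produced the ~800,000 figure. As a rough cross-check, here is a minimal sketch of the standard normal-approximation sample size formula for comparing two proportions. The significance level (two-sided, 5%) and power (80%) are assumptions, common calculator defaults rather than values from the article, and `views_per_cell` is a hypothetical helper name.

```python
from math import ceil
from statistics import NormalDist


def views_per_cell(base_cvr: float, relative_lift: float,
                   alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate views needed per test cell to detect a relative CVR change.

    Normal approximation for two proportions; alpha is the two-sided
    significance level and power is the chance of detecting a real effect.
    (Both defaults are assumptions, not values stated in the article.)
    """
    p1 = base_cvr
    p2 = base_cvr * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# 0.2% base view-through CVR, detect a 10% relative change
n = views_per_cell(0.002, 0.10)
print(f"{n:,} views per cell")  # roughly 820,000
```

Asking for more certainty (a lower `alpha` or higher `power`) or a smaller detectable lift pushes the required views up quickly, which is why over-splitting test cells gets expensive.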

3. How to test in creator marketing?

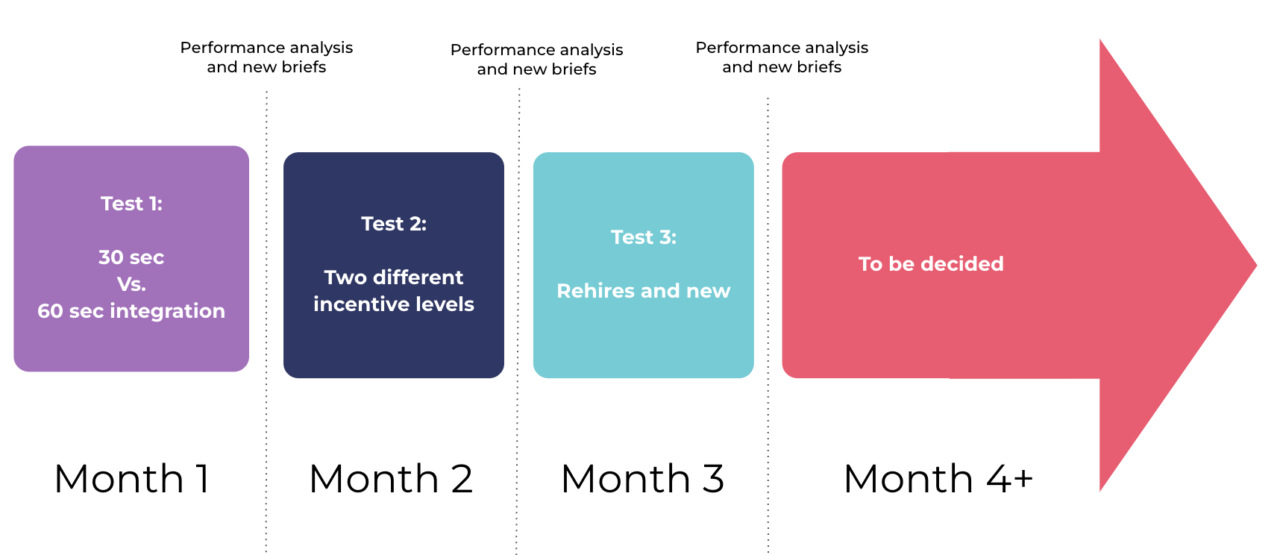

Testing should not be thought of as a one-off experiment. It should be a consistent, ongoing process with a clear framework and a planned timeline.

The best approach is to first form a few hypotheses about which tests are likely to reveal the biggest impacts on performance. You then set an explicit schedule for which tests you will run and when.

Running the campaigns

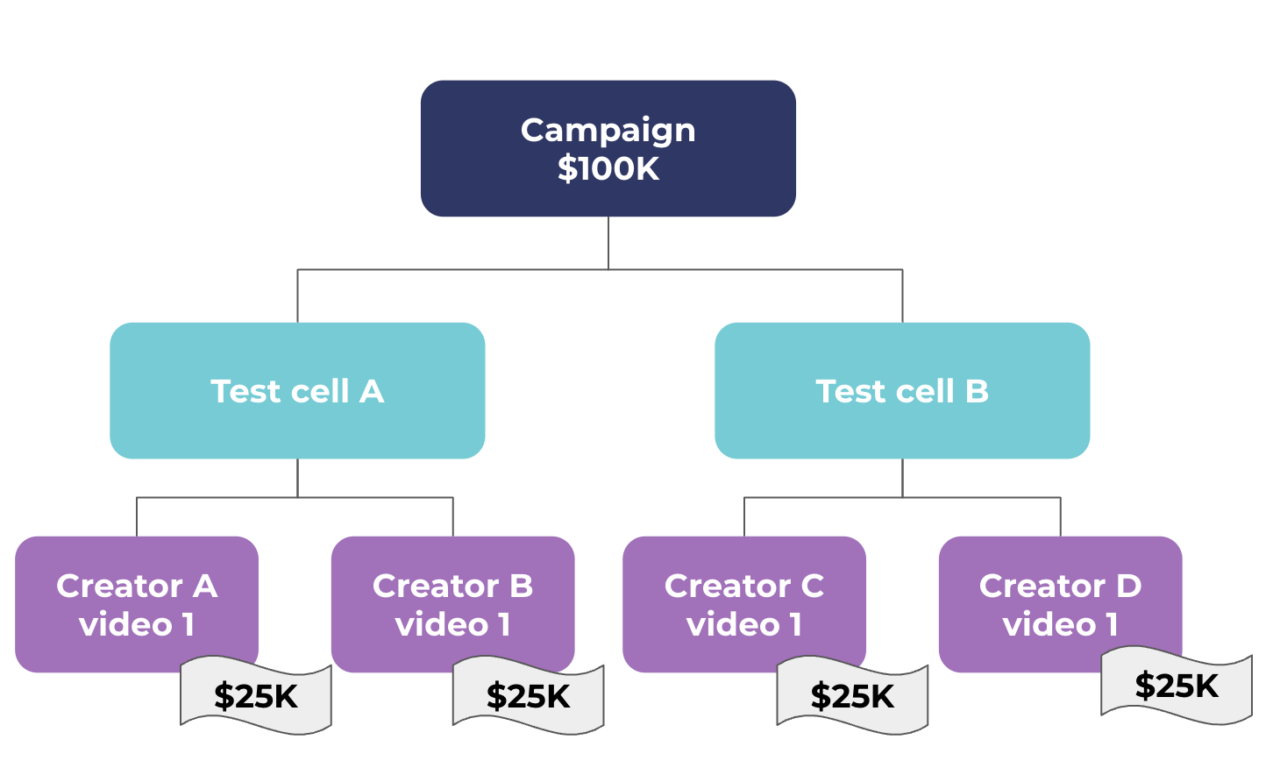

Once you have set this schedule, it's time to run the actual tests. To run an A/B test, you split your creators into two (or sometimes more) groups. Each group should be expected to deliver roughly the same number of views in your target audience. Base this on expected views rather than subscriber counts, because a channel's subscriber count often says little about how many views its next video will actually get.
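One way to get groups with similar expected views while still assigning creators at random is matched-pair randomization: rank creators by expected views, pair each with its nearest neighbour, and flip a coin within each pair. The sketch below assumes you already have a per-creator view estimate; the creator handles and numbers are hypothetical, and this is an illustrative technique, not necessarily how any particular platform does it.

```python
import random
from typing import Dict, List, Tuple


def split_into_cells(expected_views: Dict[str, int],
                     seed: int = 42) -> Tuple[List[str], List[str]]:
    """Randomly split creators into two cells with similar total expected views."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    ranked = sorted(expected_views, key=lambda c: expected_views[c], reverse=True)
    cell_a: List[str] = []
    cell_b: List[str] = []
    # Pair neighbours in the ranking; a coin flip decides who goes where.
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)
        cell_a.append(pair[0])
        cell_b.append(pair[1])
    if len(ranked) % 2 == 1:
        # The unpaired creator joins whichever cell currently expects fewer views.
        total_a = sum(expected_views[c] for c in cell_a)
        total_b = sum(expected_views[c] for c in cell_b)
        (cell_a if total_a <= total_b else cell_b).append(ranked[-1])
    return cell_a, cell_b


# Hypothetical creators and expected views per sponsored video
creators = {
    "creator_a": 250_000, "creator_b": 240_000, "creator_c": 120_000,
    "creator_d": 110_000, "creator_e": 60_000, "creator_f": 55_000,
    "creator_g": 30_000,
}
cell_a, cell_b = split_into_cells(creators)
print(cell_a, sum(creators[c] for c in cell_a))
print(cell_b, sum(creators[c] for c in cell_b))
```

Pairing keeps the cells balanced (the gap between their totals can never exceed the largest single creator's estimate), while the coin flips keep you from unconsciously putting your favourite channels in one cell.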

Once you’ve run the first test, you then adapt future campaigns based on the results. The goal should be to improve the performance further with each iteration.

Example of a simple test structure. Note that in a real test, you should use a larger number of creators per test cell.
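Before adapting the next campaign, it helps to check whether the difference between cells is larger than random noise. A minimal sketch, assuming you can attribute installs (conversions) and views to each cell, is a two-proportion z-test, the kind of check many online significance calculators run. The install and view counts in the usage line are hypothetical, and `two_proportion_z_test` is an illustrative helper, not a library function.

```python
from math import sqrt
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(conv_a: int, views_a: int,
                          conv_b: int, views_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in CVR between two cells."""
    p_a = conv_a / views_a
    p_b = conv_b / views_b
    # Pooled conversion rate under the null hypothesis of no difference
    pooled = (conv_a + conv_b) / (views_a + views_b)
    se = sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: cell A converts at 0.20%, cell B at 0.23%
z, p = two_proportion_z_test(1_600, 800_000, 1_840, 800_000)
print(f"z = {z:.2f}, p = {p:.5f}")
```

A small p-value (commonly below 0.05) suggests the difference is unlikely to be chance alone. Because creator audiences can overlap between cells, treat the result as strong evidence rather than proof.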

Only test one thing at a time

Another important rule is, in most cases, to test only one thing at a time. This makes it clearer what actually causes the performance difference. For example, you shouldn't test two different integration lengths and two different levels of incentives at the same time.

Remember to hire enough creators

You should make sure to hire a large enough number of creators/influencers. This makes the findings stronger and more generally applicable. If you only hire one or two big creators, there is a high risk that the test results are due to the audience characteristics of those particular channels, rather than to any changes you made for the test. This would in turn make it harder to understand what truly performs and scales.

Budget considerations

What kind of budgets do you need for A/B testing in influencer marketing? Your budget depends a lot on what your success metrics are. For example, if you are looking for app installs, make sure you have enough budget to generate a meaningful number of video views and installs from each test cell so you can see a difference in performance.

You can use different kinds of statistical significance calculators to estimate the scale required. If we take the example mentioned above, where you'd need about 800,000 views per test cell, and your CPM (cost per thousand impressions) is $20, running a test with two cells (1.6 million views in total) would cost you around $32,000.
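The budget arithmetic is simple enough to script when comparing scenarios. This sketch, like the example above, assumes every view is billed as one impression at the quoted CPM; `estimate_test_budget` is a hypothetical helper name.

```python
def estimate_test_budget(views_per_cell: int, cells: int, cpm_usd: float) -> float:
    """Estimated media cost of a test, treating each view as one impression."""
    total_views = views_per_cell * cells
    return total_views / 1000 * cpm_usd  # CPM = cost per 1,000 impressions


print(estimate_test_budget(800_000, cells=2, cpm_usd=20.0))  # 32000.0
```

Adding a third cell to the same test raises the cost by half again, to $48,000, which is one more reason to keep the number of cells small.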

4. In conclusion

This article is by no means an exhaustive guide to all possible kinds of testing you can do, but it should serve as a starting point. To briefly summarize, the most important thing is to have a clear plan about what you are testing and when. Your goal should be to make testing a consistent process where you constantly adapt future campaigns based on previous results.

To do this kind of testing effectively, at scale, you need the right partner and the right automation. Matchmade’s experts and platform help you to set up consistent testing and improve results with a minimal amount of manual work. If you are interested in learning more about how we can help you with creator marketing, get in touch with our team.

Want to learn more about creator marketing?

To learn more about the creator industry, how to get started, how to scale influencers as a channel, and much more, download our ebook below.